In today’s digital economy, data is the foundation of successful business operations. However, with data pouring in from countless sources — sales platforms, marketing systems, customer service channels, and more — many organizations struggle with fragmented, inconsistent, or outdated information. This is where Master Data Management (MDM) comes in, and Reltio is a leading player in this space.

Reltio Master Data Management is a modern, cloud-native MDM platform designed to help enterprises consolidate, cleanse, and unify their critical data assets. By creating a single, trusted source of truth, Reltio enables businesses to make better decisions, improve customer experiences, and strengthen compliance.

A Brief History of Reltio

Reltio was founded in 2011 by Manish Sood, a data industry veteran who saw the limitations of legacy MDM systems firsthand. With its headquarters in Redwood City, California, Reltio set out to build a next-generation MDM platform, designed for the cloud and for the demands of modern data-driven enterprises.

Since then, Reltio has grown rapidly, attracting investment from major venture capital firms and building a customer base across Fortune 500 organizations in healthcare, life sciences, financial services, retail, and other sectors. Its platform is recognized by industry analysts (such as Gartner and Forrester) for its innovation, scalability, and business value.

Why Reltio?

Unlike traditional on-premises MDM solutions, Reltio offers a cloud-first, API-driven architecture that supports real-time data processing and integration. Here are some standout features that make Reltio a compelling choice:

- Multi-Domain MDM: Manage customer, product, supplier, and location data in one place

- Cloud-native & scalable: Handles high-volume, high-velocity data seamlessly

- Data quality & governance: Cleansing, validation, survivorship, lineage tracking

- Graph technology: Discover and leverage entity relationships with connected graph models

- API-first & real-time: Modern integration to power digital ecosystems
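Survivorship is the step that turns several matched source records into one trusted "golden" record. The sketch below is a generic Python illustration of that idea, not Reltio's API or configuration format. It assumes hypothetical source systems (`crm`, `core_banking`, `web_form`) ranked by trust, keeps each attribute's non-empty value from the most trusted source, breaks ties by the most recent update, and records which source supplied each value as a simple form of lineage.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical trust ranking of source systems: lower number = more trusted.
SOURCE_PRIORITY = {"crm": 1, "core_banking": 2, "web_form": 3}


@dataclass
class SourceRecord:
    source: str        # name of the contributing system (hypothetical)
    updated: date      # last time the source changed this record
    attributes: dict   # attribute name -> value


def survive(records: list[SourceRecord]) -> dict[str, tuple[str, str]]:
    """Return {attribute: (surviving value, source it came from)}."""
    golden = {}
    all_keys = {key for r in records for key in r.attributes}
    for key in sorted(all_keys):
        # Ignore sources that have no usable value for this attribute.
        candidates = [r for r in records if r.attributes.get(key) not in (None, "")]
        if not candidates:
            continue
        # Most trusted source wins; among equals, the most recent update wins.
        best = min(
            candidates,
            key=lambda r: (SOURCE_PRIORITY.get(r.source, 99), -r.updated.toordinal()),
        )
        golden[key] = (best.attributes[key], best.source)
    return golden


records = [
    SourceRecord("web_form", date(2024, 5, 1),
                 {"name": "Jon Smith", "email": "jon@example.com", "phone": "555-0100"}),
    SourceRecord("crm", date(2023, 1, 15),
                 {"name": "Jonathan Smith", "email": "", "phone": "555-0199"}),
]
print(survive(records))
```

Here the CRM's name and phone survive because the CRM ranks higher, while the email comes from the web form because the CRM value is empty. A fixed source ranking is only one possible survivorship strategy; recency-first or per-attribute rules are equally plausible choices.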

Detailed Business Use Cases with Attributes

Let’s look at some practical business use cases where Reltio is especially valuable, with examples of typical attributes managed in each:

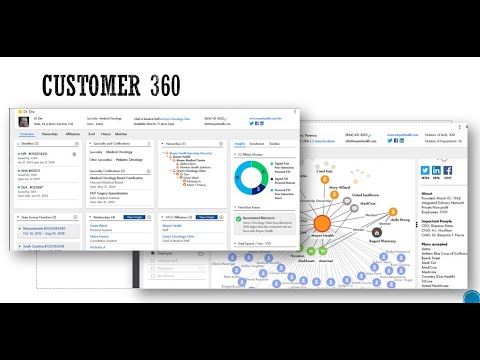

1️⃣ Customer 360 for Financial Services

Use Case: A bank needs to create a unified customer profile to improve onboarding, risk assessment, and personalized product offerings.

Typical attributes managed:

- Name

- Address

- Social Security Number / National ID

- Date of Birth

- Contact numbers

- Email addresses

- Account numbers

- KYC documents

- Risk rating

- Credit score

- Relationships to other customers or accounts (beneficiaries, joint account holders)

Business value:

- Improved compliance (KYC/AML)

- Better fraud detection

- Personalized cross-selling opportunities
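Building a unified customer profile starts with deciding which source records describe the same person. The following is a minimal, hypothetical two-tier match rule in Python, not Reltio's match configuration. A pair matches on an identical national ID, or else on the same date of birth plus normalized names whose similarity (from the standard library's `difflib.SequenceMatcher`) meets an assumed threshold of 0.85. The field names `national_id`, `dob`, and `name` are illustrative.

```python
import unicodedata
from difflib import SequenceMatcher


def normalize_name(name: str) -> str:
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", name)
    no_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    kept = "".join(c for c in no_accents.lower() if c.isalnum() or c.isspace())
    return " ".join(kept.split())


def is_match(a: dict, b: dict, threshold: float = 0.85) -> bool:
    """Hypothetical two-tier rule: exact national ID, else same DOB + similar name."""
    # Tier 1: identical, non-empty national IDs identify the same person.
    if a.get("national_id") and a.get("national_id") == b.get("national_id"):
        return True
    # Tier 2: same date of birth and a name similarity at or above the threshold.
    if a.get("dob") and a.get("dob") == b.get("dob"):
        similarity = SequenceMatcher(
            None, normalize_name(a["name"]), normalize_name(b["name"])
        ).ratio()
        return similarity >= threshold
    return False


branch = {"name": "José O'Neil", "dob": "1985-03-02", "national_id": None}
online = {"name": "Jose ONeil", "dob": "1985-03-02", "national_id": "123-45-6789"}
print(is_match(branch, online))
```

The two records match through the second tier because both names normalize to `jose oneil`. The threshold is an assumption: lowering it merges more aggressively and raises the risk of combining two different customers.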

2️⃣ Product 360 for Retail & E-commerce

Use Case: A global retailer needs a single view of its products across all sales channels to drive consistency in pricing, promotions, and the supply chain.

Typical attributes managed:

- SKU

- Product name

- Description

- Brand

- Price

- Categories

- Product images

- Inventory levels

- Supplier details

- Related products / bundles

Business value:

- Faster time-to-market for new products

- Accurate inventory planning

- Seamless omnichannel experience

3️⃣ Healthcare Provider 360

Use Case: A healthcare network needs to manage consistent information about its providers (doctors, specialists, clinics) to streamline referrals and claims processing.

Typical attributes managed:

- Provider name

- NPI (National Provider Identifier)

- Specialty

- License details

- Affiliated hospitals

- Availability

- Contact information

- Insurance acceptance

- Certifications

Business value:

- Reduced claim rejections

- Improved care coordination

- Enhanced provider search tools for patients
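The NPI is one attribute where a simple validation rule can stop bad data before it reaches claims processing. An NPI has ten digits, and the last one is a Luhn check digit computed over the first nine digits prefixed with `80840`, so the check catches any single mistyped digit and most swaps of adjacent digits. The function below is a standalone Python sketch of that check; the name `is_valid_npi` is hypothetical, and this is not presented as a built-in Reltio rule.

```python
def is_valid_npi(npi: str) -> bool:
    """Validate a 10-digit NPI with the Luhn algorithm over '80840' + first 9 digits."""
    if len(npi) != 10 or not all(c in "0123456789" for c in npi):
        return False
    payload = [int(c) for c in "80840" + npi[:9]]
    total = 0
    # Walk right to left; double every second digit, starting with the rightmost payload digit.
    for position, digit in enumerate(reversed(payload)):
        if position % 2 == 0:
            digit *= 2
            if digit > 9:
                digit -= 9  # same as summing the two digits of the product
        total += digit
    expected_check = (10 - total % 10) % 10
    return expected_check == int(npi[9])


for candidate in ["1234567893", "1234567890", "12345678"]:
    print(candidate, is_valid_npi(candidate))
```

`1234567893` passes, changing its last digit to `0` makes it fail, and the eight-digit value is rejected before the checksum is computed. A passing checksum only shows the number is well formed, not that it belongs to an active provider.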

4️⃣ Supplier 360 for Manufacturing

Use Case: A manufacturer wants to manage supplier information globally to optimize procurement, quality, and compliance.

Typical attributes managed:

- Supplier name

- Tax ID

- Supplier location

- Product categories supplied

- Pricing agreements

- Contracts

- Quality certifications

- Risk assessments

- Relationship hierarchy (parent/subsidiary)

Business value:

- Reduced supplier risk

- Consolidated spend

- Better contract compliance
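The parent/subsidiary hierarchy is what makes consolidated spend possible: spend recorded against each subsidiary can be rolled up to its ultimate parent. The sketch below assumes the hierarchy is available as a simple child-to-parent mapping with hypothetical supplier IDs. It is a generic Python illustration of the roll-up, not a description of how Reltio stores or queries relationships.

```python
from collections import defaultdict


def ultimate_parent(supplier_id: str, parent_of: dict[str, str]) -> str:
    """Follow child -> parent links until reaching a supplier with no parent."""
    seen = set()
    current = supplier_id
    while current in parent_of:
        if current in seen:
            raise ValueError(f"cycle in supplier hierarchy at {current!r}")
        seen.add(current)
        current = parent_of[current]
    return current


def consolidated_spend(spend: dict[str, float], parent_of: dict[str, str]) -> dict[str, float]:
    """Sum each supplier's spend under its ultimate parent."""
    totals: dict[str, float] = defaultdict(float)
    for supplier_id, amount in spend.items():
        totals[ultimate_parent(supplier_id, parent_of)] += amount
    return dict(totals)


# Hypothetical hierarchy: two subsidiaries under a holding company under a global parent.
parent_of = {
    "acme-de": "acme-holding",
    "acme-us": "acme-holding",
    "acme-holding": "acme-global",
}
spend = {"acme-de": 120_000.0, "acme-us": 80_000.0, "acme-global": 50_000.0, "bolt-co": 30_000.0}
print(consolidated_spend(spend, parent_of))
```

Every `acme` entity rolls up to `acme-global`, while `bolt-co` has no parent link and keeps its own total. The cycle check guards against contradictory parent links, which can appear when a hierarchy is assembled from several sources.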

Typical Industries Benefiting from Reltio

- Retail & E-commerce — better product and customer data for omnichannel

- Financial Services — single customer view for compliance and fraud

- Healthcare — provider and patient data management

- Life Sciences — compliance and product data governance

- Manufacturing — supplier and product data optimization

Conclusion

Master Data Management is no longer a “nice-to-have” — it’s a business imperative. Reltio’s modern, flexible, and scalable approach helps enterprises build a trustworthy data foundation to thrive in the digital era.

With its rich history of innovation, strong multi-domain capabilities, and focus on real-time, API-driven architecture, Reltio is well positioned to support modern businesses as they navigate increasingly complex data challenges.

If you’re exploring a future-ready MDM solution to unify and unleash the power of your data, Reltio is absolutely worth a closer look.