Are you looking for information about Informatica Master Data Management architecture? Would you also be interested in knowing which components are involved? If so, you have reached the right place. In this article we will explore Informatica MDM architecture in detail. We will also look at the upstream and downstream systems involved.

MDM Architecture Overview

As we know, Master Data Management (MDM) is a solution for mastering business information. MDM involves several processes that help us achieve uniformity, accuracy, and consistency in business data. Such business-critical data can be used for better process management and for achieving the organization's goals. With the help of an MDM solution, we can carry out data governance practices very effectively.

If we look at the big picture of MDM architecture, we can see three basic layers. The first layer is the source systems, the second layer is the MDM implementation, and the third layer is consumption.

The source system layer includes operational systems as well as systems which maintain third-party data. This layer may include multiple sources on different platforms such as Siebel, Oracle, SAP, Acxiom, or D&B. Data is not pushed from the source systems to the MDM layer directly. To move data from a source system to the MDM layer, we normally use an ETL layer (here ETL stands for Extract, Transform, and Load). This data push may happen in batch mode, real-time mode, or near real-time mode.

Once data lands in the MDM landing tables, data cleansing happens first. Data standardization rules are applied to enrich the business data, and the cleansed and standardized data is loaded into staging tables. To maintain data integrity, constraints are enforced on the base object table while data is loaded from the staging table to the base object table. This is not the end of the process; the actual processing work starts after this. Even though the cleansed and standardized records are loaded in the MDM system, there will still be exact and fuzzy duplicate records in the system. The next process, the match process, identifies such records based on the business criteria and rules developed during the data quality analysis phase. These duplicate records are then consolidated to make a golden copy of each record. The golden copies may hold relationships among them, e.g. Manager and Employee relationships or Organization and Branch relationships. These relationships can also be maintained in the MDM system in the form of hierarchies.
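The flow described above (landing, cleanse, staging, match, consolidate) can be sketched in a few lines of Python. This is only a toy illustration and not how Informatica MDM is implemented: the `Record` fields, the email-based match rule, and the "longest name wins" survivorship rule are all assumptions made for the example.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class Record:
    source: str
    name: str
    email: str


def cleanse(raw: Record) -> Record:
    """Standardize a landing record before it is loaded into staging."""
    return Record(
        source=raw.source,
        name=" ".join(raw.name.split()).title(),  # collapse spaces, fix casing
        email=raw.email.strip().lower(),
    )


def match_key(rec: Record) -> str:
    """Toy match rule (assumption): same email means same real-world entity."""
    return rec.email


def consolidate(records: list[Record]) -> list[Record]:
    """Group matched records and keep one golden copy per group."""
    groups: dict[str, list[Record]] = {}
    for rec in records:
        groups.setdefault(match_key(rec), []).append(rec)
    golden = []
    for dupes in groups.values():
        # Toy survivorship rule (assumption): the longest name wins.
        best_name = max((r.name for r in dupes), key=len)
        golden.append(Record(source="MDM", name=best_name, email=dupes[0].email))
    return golden


# Landing data as it might arrive from three hypothetical source systems.
landing = [
    Record("SAP", "  john   smith ", "John.Smith@Example.com "),
    Record("Siebel", "John A. Smith", "john.smith@example.com"),
    Record("Oracle", "jane doe", "jane.doe@example.com"),
]
staging = [cleanse(r) for r in landing]
golden_records = consolidate(staging)
```

Here the SAP and Siebel records collapse into a single golden record, while the Oracle record survives on its own. In a real implementation the match rules are far richer (fuzzy matching on several columns) and survivorship is driven by configured trust rather than string length.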

Data stewardship helps keep the golden copies of records in a consistent state and enforces controls on create and update processes through the user interface that comes with the Informatica MDM product, e.g. Informatica Data Director or the Customer 360 application.

All these MDM features, such as data modeling, data quality, identifying duplicates, consolidating records, and maintaining hierarchies and workflows, must not come at the cost of security, hence MDM also comes with built-in role-based security. If required, we can integrate the organization's existing security infrastructure, such as LDAP, for authentication. However, authorization for MDM components still needs to happen in the MDM hub through the role-based mechanism. One of the great things about Informatica MDM is that it keeps MDM configurations in sync with the help of metadata.
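The split between external authentication and hub-side authorization can be pictured with a small sketch. Everything in it is hypothetical: the role names, privilege names, users, and the `fake_ldap` stand-in are invented for illustration and do not correspond to Informatica APIs or to a real LDAP client.

```python
# Role-to-privilege mapping kept inside the (hypothetical) hub.
ROLE_PRIVILEGES = {
    "data_steward": {"read", "update", "merge"},
    "read_only_user": {"read"},
}

# User-to-role assignments, also kept inside the hub.
USER_ROLES = {
    "asha": {"data_steward"},
    "ben": {"read_only_user"},
}


def fake_ldap(username: str, password: str) -> bool:
    """Stand-in for an external directory; a real one would query LDAP."""
    return password == "secret"


def authenticate(username: str, password: str, provider) -> bool:
    """Authentication is delegated to whichever provider is plugged in."""
    return provider(username, password)


def is_authorized(username: str, privilege: str) -> bool:
    """Authorization is always decided from the hub's own role assignments."""
    roles = USER_ROLES.get(username, set())
    return any(privilege in ROLE_PRIVILEGES.get(role, set()) for role in roles)
```

The point of the design is that swapping `fake_ldap` for another provider changes who can log in, but never what a logged-in user is allowed to do.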

Okay, we have created golden copies of records in the MDM hub. What do we do with this data? Thanks for asking that question. After the successful implementation of an MDM solution, the golden copies of records are available for consumers to consume. There could be a third-party application that consumes data directly from MDM. However, in most cases, the data is pushed from MDM to the consuming systems through an ETL layer, just like the data load from source systems to MDM. It could be in batch mode, real-time, or near real-time. There are a few other types of systems, such as analytical or reporting systems, which consume data for different purposes. Analytical consuming systems such as data warehouses, data marts, or portal dashboards use these golden records to analyze the data and come up with better organizational growth plans. On the other hand, reporting consuming systems such as business intelligence or corporate performance management help produce reports to improve the effectiveness of business processes and to achieve business goals.

MDM Architecture - Deep Dive

We now have a basic idea of where Informatica MDM fits in the enterprise. Now is the time to deep dive into the MDM system architecture. Informatica MDM has three major components: the Hub Store, the MDM Hub, and the Services Integration Framework.

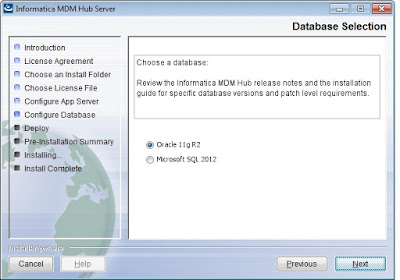

The Hub Store is a database component where business data is stored and consolidated. The Hub Store is based on an underlying database, which can be Microsoft SQL Server, Oracle, or DB2. It contains information about all of the databases that are part of your Informatica MDM Hub implementation. It has two parts: the Master Database and the Operational Reference Store, also known as the ORS. We will use the term ORS quite often during our lectures as well as during real-world MDM implementations.

What is this Master Database component? The Master Database is a database schema which maintains the most critical configuration details of the Informatica MDM Hub. It includes user accounts created using the users section of the MDM hub, and security configuration such as usernames and encrypted passwords for database schema users and application users. The Master Database also maintains a registry of Operational Reference Stores, e.g. if you register 3 ORSs, then those 3 entries will be present in the Master Database. The default name of the Master Database is CMX_SYSTEM. In normal practice we use the term CMX_SYSTEM quite often, just like ORS.

We know about registering databases, creating users, providing tool access, message queues, and security providers. Where are these configurations maintained? Yes, you are right, this information is also persisted in the Master Database. The Master Database stores the connection settings and properties for each Operational Reference Store registered through the MDM hub. In other words, we can access and manage multiple Operational Reference Stores from one Master Database.

An important thing to remember is that a given Informatica MDM Hub environment can have only one Master Database, i.e. only one CMX_SYSTEM per MDM environment.

Okay, if the Master Database maintains configuration details, then where is the business data stored? I am glad you asked that question. The answer is that business data is stored in the Operational Reference Store. Let's understand what else the Operational Reference Store contains. Along with business data, the ORS also maintains the rules for processing the master data. If you remember match columns and match rule sets, all such rules are stored in the ORS. It also stores additional information such as BVT (Best Version of the Truth), tokens, and data lineage along with history. It has repository tables, whose names start with C_REPOS, and repository archive tables, whose names start with C_REPAR, which hold all this information. Do you want to know the default name of the ORS? It is CMX_ORS, but you can name it whatever you like because, in the end, it is just a database schema name. Unlike the Master Database, there is no restriction on the number of ORSs in a given MDM hub environment. However, configuring many ORSs in the MDM hub can adversely impact your MDM environment, so use this wisely.
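As a small illustration of the naming convention, the sketch below sorts table names by the two prefixes mentioned above. The table names in the list are made up for the example and are not taken from a real ORS schema; only the `C_REPOS` and `C_REPAR` prefixes come from the text.

```python
def classify_table(table_name: str) -> str:
    """Classify an ORS table by the prefix convention described above."""
    name = table_name.upper()
    if name.startswith("C_REPOS"):
        return "repository"
    if name.startswith("C_REPAR"):
        return "repository archive"
    return "other"


# Hypothetical table names, used only to exercise the function.
tables = ["C_REPOS_EXAMPLE", "C_REPAR_EXAMPLE", "C_CUSTOMER"]
by_kind = {name: classify_table(name) for name in tables}
```

A check like this can be handy when browsing an ORS schema and trying to separate metadata tables from the tables that hold business data.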

An important thing to remember about the ORS is that we cannot associate a single Operational Reference Store with multiple Master Databases. The Master Database also stores site-level information, such as the number of incorrect log-in attempts allowed before a user account is locked out.
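The relationship between the Master Database and its Operational Reference Stores can be modeled as a simple registry. The sketch below is a conceptual model only, assuming hypothetical class names, connection fields, and a made-up `max_failed_logins` value; it does not reflect the actual CMX_SYSTEM table layout.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class OperationalReferenceStore:
    schema_name: str
    connection: dict  # e.g. host and port; the keys are assumptions
    master: Optional["MasterDatabase"] = None


@dataclass
class MasterDatabase:
    name: str = "CMX_SYSTEM"
    max_failed_logins: int = 3  # hypothetical site-level setting
    registry: dict = field(default_factory=dict)

    def register(self, ors: OperationalReferenceStore) -> None:
        """Record the ORS connection settings in this Master Database."""
        # An ORS may belong to one Master Database only.
        if ors.master is not None and ors.master is not self:
            raise ValueError(
                f"{ors.schema_name} is already registered with {ors.master.name}"
            )
        ors.master = self
        self.registry[ors.schema_name] = ors.connection


# One Master Database managing several ORS schemas.
cmx_system = MasterDatabase()
customer_ors = OperationalReferenceStore("CMX_ORS", {"host": "db1", "port": 1521})
product_ors = OperationalReferenceStore("PRODUCT_ORS", {"host": "db2", "port": 1521})
cmx_system.register(customer_ors)
cmx_system.register(product_ors)
```

Trying to register `customer_ors` with a second `MasterDatabase` instance raises a `ValueError`, which mirrors the one-master-per-ORS rule, while a single Master Database is free to hold any number of ORS entries.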

The next important component in the MDM architecture is the application server. Informatica MDM supports three application servers: JBoss, WebLogic, and WebSphere. Irrespective of which application server you are using, the components which get installed on it remain the same. We normally install the Process Server and the Hub Server on the application server. Let's understand a little more about the Process Server. It is Java code (to be specific, a Java servlet) that cleanses the data and also processes batch jobs. Prior to MDM 9.7, we used to call it the Cleanse Server instead of the Process Server. Why? Because only cleansing used to happen on the Cleanse Server. But now both data cleansing and batch job processing happen on the Process Server, hence the name. We can configure multiple Process Servers for better performance. Apart from that, we can configure a Process Server in 3 different modes: Batch mode, Online mode, and Batch and Online mode. We can choose the mode as per our business need. On the other hand, the Hub Server is used for core and common services, which include security, access, and session management.
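To make the three Process Server modes concrete, here is a minimal sketch of how requests could be routed when several Process Servers are configured. The server names and the routing function are hypothetical; the actual selection logic inside Informatica MDM is not described in this article.

```python
from enum import Enum


class Mode(Enum):
    BATCH = "batch"
    ONLINE = "online"
    BOTH = "batch and online"


def can_handle(mode: Mode, request_kind: str) -> bool:
    """A server in BOTH mode takes any request; otherwise kinds must match."""
    return mode is Mode.BOTH or mode.value == request_kind


# Hypothetical set of configured Process Servers and their modes.
servers = {"ps1": Mode.BATCH, "ps2": Mode.ONLINE, "ps3": Mode.BOTH}


def eligible_servers(request_kind: str) -> list:
    """Return the names of servers that may process this kind of request."""
    return sorted(
        name for name, mode in servers.items() if can_handle(mode, request_kind)
    )
```

With this setup, a batch job could go to `ps1` or `ps3`, while an online (real-time) cleanse request could go to `ps2` or `ps3`. Dedicating some servers to one mode is one way to keep heavy batch loads from slowing down online requests.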